| Michael Tanenhaus | |
|---|---|
| Born | |
| Alma mater | University of Iowa; Columbia University |
| Scientific career | |
| Institutions | Antioch College; University of Rochester; Wayne State University |

Michael Tanenhaus is an American psycholinguist, author, and lecturer. He is the Beverly Petterson Bishop and Charles W. Bishop Professor of Brain and Cognitive Sciences and Linguistics at the University of Rochester. He served as Director of the Center for Language Sciences at the University of Rochester from 1996 to 2000 and again from 2003 to 2009.

Tanenhaus's research focuses on the processes that underlie real-time spoken language comprehension and reading. He is also interested in how linguistic context interacts with various non-linguistic contexts.

Biography

Michael K. Tanenhaus grew up in New York and Iowa City, in a home that encouraged academics and learning. His father was a political scientist, and his mother always surrounded the family with books and literature. Tanenhaus's siblings include New York Times Book Review editor Sam Tanenhaus, filmmaker Beth Tanenhaus Winsten, and legal historian David S. Tanenhaus.

After a brief stint at Antioch College, Tanenhaus obtained his Bachelor of Science in speech pathology and audiology from the University of Iowa. He received his Ph.D. from Columbia University in 1978 and immediately began teaching at Wayne State University, first as an assistant professor and then as an associate professor. Tanenhaus joined the faculty at the University of Rochester in 1983. He continues to be an involved researcher and faculty member who teaches courses on language processing and advises students. From 2003 to 2009 he again served as director of the Center for Language Sciences. In 2018, he was awarded the David E. Rumelhart Prize by the Cognitive Science Society, a leading prize in cognitive science.

Eye tracking

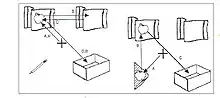

"While Tanenhaus was not the first to notice the connection between eye movements and attention, he and his team were the first to systematically record how the technology could be used to analyze language comprehension," notes Hauser (2004, p. 2). The MTanLab uses head-mounted eye-tracking devices, which are worn like visors around the head. The apparatus tracks pupil and corneal reflections and, once calibrated, can accurately tell researchers where the person wearing the device is looking. Because shifts in gaze are related to shifts in attention, researchers use this information to make inferences about the subject's cognitive processes. Just and Carpenter (1980) hypothesized that there is "no appreciable lag between what is fixated and what is processed" (p. 331). On this assumption, the Visual World Paradigm, in which listeners' eye movements to objects in a scene are recorded while they hear spoken language, can be used to study the time course of spoken language comprehension and the role visual context plays in language understanding.
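A common way to summarize such gaze data is to compute, for each time bin, the proportion of recorded gaze samples that fall on each object in the scene. The following is only a minimal sketch of that idea, not the lab's actual analysis pipeline. The function name `fixation_proportions`, the sample format `(trial_id, time_ms, region)`, the region labels, and the 100 ms bin width are all assumptions made for illustration, and the data are invented.

```python
from collections import Counter, defaultdict


def fixation_proportions(samples, bin_ms=100):
    """Proportion of gaze samples on each region, per time bin.

    samples: iterable of (trial_id, time_ms, region) tuples, where time_ms
    is assumed to be measured from the onset of the spoken instruction and
    region is a hypothetical label for the object being looked at.
    """
    counts = defaultdict(Counter)
    for _trial_id, time_ms, region in samples:
        # Assign each sample to the bin that starts at or before its timestamp.
        bin_start = (time_ms // bin_ms) * bin_ms
        counts[bin_start][region] += 1

    proportions = {}
    for bin_start in sorted(counts):
        total = sum(counts[bin_start].values())
        proportions[bin_start] = {
            region: n / total for region, n in counts[bin_start].items()
        }
    return proportions


# Invented gaze samples from two illustrative trials.
samples = [
    (1, 0, "other"), (1, 50, "other"), (1, 100, "target"), (1, 150, "target"),
    (2, 0, "competitor"), (2, 50, "other"), (2, 100, "competitor"), (2, 150, "target"),
]

curve = fixation_proportions(samples)
for bin_start, props in curve.items():
    print(bin_start, props)
```

Plotting these proportions against time gives a curve for each object, and a rise in looks to one object shortly after a particular word is heard is the kind of evidence used to infer when listeners commit to an interpretation.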

Representative research

Integration of Visual and Linguistic Information in Spoken Language Comprehension

In this study Tanenhaus examined how visual context affects language comprehension. He wanted to investigate whether language comprehension is informationally encapsulated, or modular, as proposed by many theorists and researchers, including Jerry Fodor.

Tanenhaus used eye-tracking software and hardware to record the movement of the subjects' eyes as they listened to phrases and manipulated objects in a scene. For critical stimuli, Tanenhaus used phrases that contained syntactic ambiguities, such as "Put the apple on the towel in the box," where the prepositional phrase "on the towel" is initially ambiguous between being a modifier (indicating which apple) and a goal (indicating where to put the apple). "Put the apple that's on the towel in the box" served as the control condition because "that's" signals that "on the towel" is unambiguously a modifier. Similar syntactic ambiguities had been used to provide evidence for modularity within syntactic processing. Tanenhaus speculated that a visual context might be sufficient to influence the resolution of these ambiguities.

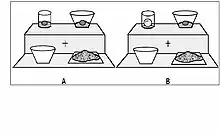

In the first scene (Figure A), subjects become confused: they make many eye movements, indicating that they are unsure which items to manipulate. In the second scene, where the pencil is replaced by another apple on a napkin, subjects understand the sentence more easily. The second apple disambiguates the phrase, because the subject understands that "on the towel" modifies "the apple" (identifying which apple is meant) rather than referring to a destination.

The results strongly support the hypothesis that language comprehension, specifically at the syntactic level, is informed by visual information, which is inconsistent with a strictly modular account. They also appear to support Just and Carpenter's "Strong Eye Mind Hypothesis": the rapid mental processes that make up spoken language comprehension can be observed through eye movements.

Actions and Affordances in Syntactic Ambiguity Resolution

Using a task similar to that of the previous study, Tanenhaus took the method one step further by not only monitoring eye movements but also examining properties of the candidate objects within the scenes (Chambers et al., 2004). Subjects heard a sentence like "Pour the egg in the bowl over the flour"; "Pour the egg that's in the bowl over the flour" was used as a control. The first scene, in Figure B, contained a "compatible competitor": both eggs in the scene were in liquid form. On hearing the phrase "in the bowl," participants became confused as to which egg to use. The next scene contained an "incompatible competitor": one egg was solid and one was liquid. In this case it was much easier for subjects to choose the egg that was in a state in which it could be poured.

The results suggest that referents were assessed in terms of how compatible they were with the instructions. This supports the hypothesis that non-linguistic domain restrictions can influence syntactic ambiguity resolution. The participants applied situation-specific, contextual properties to the way in which they followed the instructions. The results show that language is processed incrementally, as an utterance unfolds, and that visual information and context play a role in the processing.

Books

Tanenhaus has co-edited two books. The first, "Lexical Ambiguity Resolution: Perspectives from Psycholinguistics, Neuropsychology, and Artificial Intelligence," was published in 1988. It contains eighteen original papers on lexical ambiguity resolution. His most recent book, "Approaches to Studying World-Situated Language Use: Bridging the Language-as-Product and Language-as-Action Traditions," was published in 2004. It argues for the importance of considering both social and cognitive aspects when studying language processing, and is made up of papers and reports of relevant experimental findings.

References

- Just, M. & Carpenter, P. (1980). A theory of reading: From eye fixations to comprehension. Psychological Review, 87, 329–354.

- Chambers et al. (2004). Actions and Affordances in Syntactic Ambiguity Resolution. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(3), 687–696.

- Hauser, Scott (2004). The Eyes Have It. Rochester Review.

- Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy (1995). Integration of Visual and Linguistic Information in Spoken Language Comprehension. Science, 268, 1632–1634.

- Tanenhaus, Michael - Faculty Website. University of Rochester.