Extremal optimization (EO) is an optimization heuristic inspired by the Bak–Sneppen model of self-organized criticality from the field of statistical physics. This heuristic was designed initially to address combinatorial optimization problems such as the travelling salesman problem and spin glasses, although the technique has been demonstrated to function in optimization domains.

Relation to self-organized criticality

Self-organized criticality (SOC) is a statistical physics concept to describe a class of dynamical systems that have a critical point as an attractor. Specifically, these are non-equilibrium systems that evolve through avalanches of change and dissipations that reach up to the highest scales of the system. SOC is said to govern the dynamics behind some natural systems that have these burst-like phenomena including landscape formation, earthquakes, evolution, and the granular dynamics of rice and sand piles. Of special interest here is the Bak–Sneppen model of SOC, which is able to describe evolution via punctuated equilibrium (extinction events) – thus modelling evolution as a self-organised critical process.

Relation to computational complexity

Another piece in the puzzle is work on computational complexity, specifically that critical points have been shown to exist in NP-complete problems, where near-optimum solutions are widely dispersed and separated by barriers in the search space causing local search algorithms to get stuck or severely hampered. It was the evolutionary self-organised criticality model by Bak and Sneppen and the observation of critical points in combinatorial optimisation problems that lead to the development of Extremal Optimization by Stefan Boettcher and Allon Percus.

The technique

EO was designed as a local search algorithm for combinatorial optimization problems. Unlike genetic algorithms, which work with a population of candidate solutions, EO evolves a single solution and makes local modifications to the worst components. This requires that a suitable representation be selected which permits individual solution components to be assigned a quality measure ("fitness"). This differs from holistic approaches such as ant colony optimization and evolutionary computation that assign equal-fitness to all components of a solution based upon their collective evaluation against an objective function. The algorithm is initialized with an initial solution, which can be constructed randomly, or derived from another search process.

The technique is a fine-grained search, and superficially resembles a hill climbing (local search) technique. A more detailed examination reveals some interesting principles, which may have applicability and even some similarity to broader population-based approaches (evolutionary computation and artificial immune system). The governing principle behind this algorithm is that of improvement through selectively removing low-quality components and replacing them with a randomly selected component. This is obviously at odds with genetic algorithms, the quintessential evolutionary computation algorithm that selects good solutions in an attempt to make better solutions.

The resulting dynamics of this simple principle is firstly a robust hill climbing search behaviour, and secondly a diversity mechanism that resembles that of multiple-restart search. Graphing holistic solution quality over time (algorithm iterations) shows periods of improvement followed by quality crashes (avalanche) very much in the manner as described by punctuated equilibrium. It is these crashes or dramatic jumps in the search space that permit the algorithm to escape local optima and differentiate this approach from other local search procedures. Although such punctuated-equilibrium behaviour can be "designed" or "hard-coded", it should be stressed that this is an emergent effect of the negative-component-selection principle fundamental to the algorithm.

EO has primarily been applied to combinatorial problems such as graph partitioning and the travelling salesman problem, as well as problems from statistical physics such as spin glasses.

Variations on the theme and applications

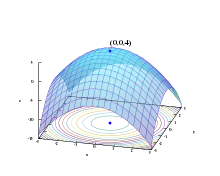

Generalised extremal optimization (GEO) was developed to operate on bit strings where component quality is determined by the absolute rate of change of the bit, or the bits contribution to holistic solution quality. This work includes application to standard function optimisation problems as well as engineering problem domains. Another similar extension to EO is Continuous Extremal Optimization (CEO).

EO has been applied to image rasterization as well as used as a local search after using ant colony optimization. EO has been used to identify structures in complex networks. EO has been used on a multiple target tracking problem. Finally, some work has been done on investigating the probability distribution used to control selection.

See also

References

- Bak, Per; Tang, Chao; Wiesenfeld, Kurt (1987-07-27). "Self-organized criticality: An explanation of the 1/fnoise". Physical Review Letters. American Physical Society (APS). 59 (4): 381–384. Bibcode:1987PhRvL..59..381B. doi:10.1103/physrevlett.59.381. ISSN 0031-9007. PMID 10035754. S2CID 7674321.

- Bak, Per; Sneppen, Kim (1993-12-13). "Punctuated equilibrium and criticality in a simple model of evolution". Physical Review Letters. American Physical Society (APS). 71 (24): 4083–4086. Bibcode:1993PhRvL..71.4083B. doi:10.1103/physrevlett.71.4083. ISSN 0031-9007. PMID 10055149.

- P Cheeseman, B Kanefsky, WM Taylor, "Where the really hard problems are", Proceedings of the 12th IJCAI, (1991)

- G Istrate, "Computational complexity and phase transitions", Proceedings. 15th Annual IEEE Conference on Computational Complexity, 104–115 (2000)

- Stefan Boettcher, Allon G. Percus, "Extremal Optimization: Methods derived from Co-Evolution", Proceedings of the Genetic and Evolutionary Computation Conference (1999)

- Boettcher, Stefan (1999-01-01). "Extremal optimization of graph partitioning at the percolation threshold". Journal of Physics A: Mathematical and General. IOP Publishing. 32 (28): 5201–5211. arXiv:cond-mat/9901353. Bibcode:1999JPhA...32.5201B. doi:10.1088/0305-4470/32/28/302. ISSN 0305-4470. S2CID 7925735.

- Boettcher, Stefan; Percus, Allon (2000), "Nature's way of optimizing", Artificial Intelligence, 119 (1–2): 275–286, arXiv:cond-mat/9901351, doi:10.1016/S0004-3702(00)00007-2, S2CID 7128022

- Boettcher, S. (2000). "Extremal optimization: heuristics via coevolutionary avalanches". Computing in Science & Engineering. Institute of Electrical and Electronics Engineers (IEEE). 2 (6): 75–82. arXiv:cond-mat/0006374. Bibcode:2000CSE.....2f..75B. doi:10.1109/5992.881710. ISSN 1521-9615. S2CID 7259036.

- Boettcher, Stefan; Percus, Allon G. (2001-06-04). "Optimization with Extremal Dynamics". Physical Review Letters. American Physical Society (APS). 86 (23): 5211–5214. arXiv:cond-mat/0010337. Bibcode:2001PhRvL..86.5211B. doi:10.1103/physrevlett.86.5211. ISSN 0031-9007. PMID 11384460. S2CID 3261749.

- Dall, Jesper; Sibani, Paolo (2001). "Faster Monte Carlo simulations at low temperatures. The waiting time method". Computer Physics Communications. 141 (2): 260–267. arXiv:cond-mat/0107475. Bibcode:2001CoPhC.141..260D. doi:10.1016/s0010-4655(01)00412-x. ISSN 0010-4655. S2CID 14585624.

- Boettcher, Stefan; Grigni, Michelangelo (2002-01-28). "Jamming model for the extremal optimization heuristic". Journal of Physics A: Mathematical and General. IOP Publishing. 35 (5): 1109–1123. arXiv:cond-mat/0110165. Bibcode:2002JPhA...35.1109B. doi:10.1088/0305-4470/35/5/301. ISSN 0305-4470. S2CID 640976.

- Souham Meshoul and Mohamed Batouche, "Robust Point Correspondence for Image Registration Using Optimization with Extremal Dynamics", Lecture Notes in Computer Science 2449, 330–337 (2002)

- Onody, Roberto N.; De Castro, Paulo A. (2003). "Self-Organized Criticality, Optimization and Biodiversity". International Journal of Modern Physics C. World Scientific Pub Co Pte Lt. 14 (7): 911–916. arXiv:cond-mat/0302260. Bibcode:2003IJMPC..14..911O. doi:10.1142/s0129183103005054. ISSN 0129-1831. S2CID 14553130.

- Boettcher, Stefan; Percus, Allon G. (2004-06-24). "Extremal optimization at the phase transition of the three-coloring problem". Physical Review E. American Physical Society (APS). 69 (6): 066703. arXiv:cond-mat/0402282. Bibcode:2004PhRvE..69f6703B. doi:10.1103/physreve.69.066703. ISSN 1539-3755. PMID 15244779. S2CID 3070942.

- Middleton, A. Alan (2004-05-14). "Improved extremal optimization for the Ising spin glass". Physical Review E. American Physical Society (APS). 69 (5): 055701(R). arXiv:cond-mat/0402295. Bibcode:2004PhRvE..69e5701M. doi:10.1103/physreve.69.055701. ISSN 1539-3755. PMID 15244875. S2CID 28439352.

- Heilmann, F; Hoffmann, K. H; Salamon, P (2004). "Best possible probability distribution over extremal optimization ranks". Europhysics Letters (EPL). IOP Publishing. 66 (3): 305–310. Bibcode:2004EL.....66..305H. doi:10.1209/epl/i2004-10011-3. ISSN 0295-5075. S2CID 250810936.

- Pontus Svenson, "Extremal optimization for sensor report pre-processing", Proc SPIE 5429, 162–171 (2004)

- Zhou, Tao; Bai, Wen-Jie; Cheng, Long-Jiu; Wang, Bing-Hong (2005-07-06). "Continuous extremal optimization for Lennard-Jones clusters". Physical Review E. American Physical Society (APS). 72 (1): 016702. arXiv:cond-mat/0411428. Bibcode:2005PhRvE..72a6702Z. doi:10.1103/physreve.72.016702. ISSN 1539-3755. PMID 16090129. S2CID 26578844.

- Duch, Jordi; Arenas, Alex (2005-08-24). "Community detection in complex networks using extremal optimization". Physical Review E. American Physical Society (APS). 72 (2): 027104. arXiv:cond-mat/0501368. Bibcode:2005PhRvE..72b7104D. doi:10.1103/physreve.72.027104. ISSN 1539-3755. PMID 16196754. S2CID 13898113.

- Ahmed, E.; Elettreby, M.F. (2006). "On combinatorial optimization motivated by biology". Applied Mathematics and Computation. Elsevier BV. 172 (1): 40–48. doi:10.1016/j.amc.2005.01.122. ISSN 0096-3003.

External links

- Stefan Boettcher – Physics Department, Emory University

- Allon Percus – Claremont Graduate University

- Global Optimization Algorithms – Theory and Application – – Thomas Weise